Robust Reference-based Super-Resolution via C2-Matching

Abstract

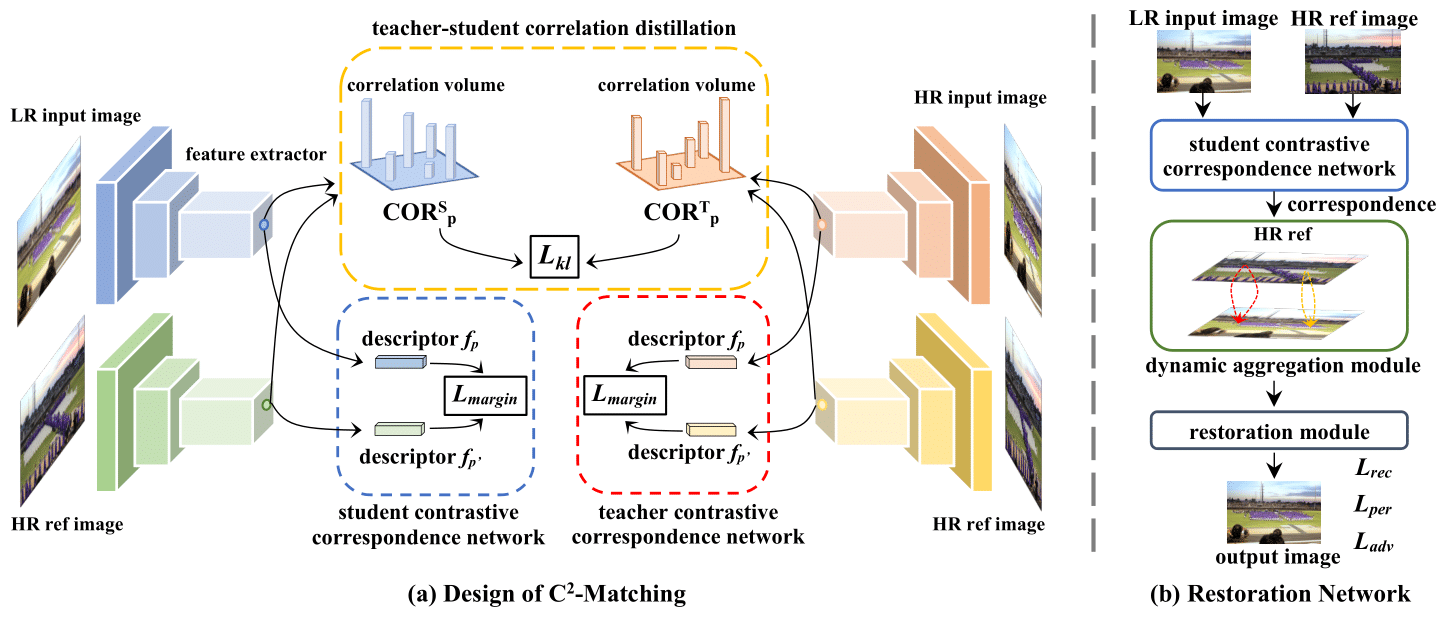

Reference-based Super-Resolution (Ref-SR) has recently emerged as a promising paradigm to enhance a low-resolution (LR) input image by introducing an additional high-resolution (HR) reference image. Existing Ref-SR methods mostly rely on implicit correspondence matching to borrow HR textures from reference images to compensate for the information loss in input images. However, performing local transfer is difficult because of two gaps between input and reference images: the transformation gap (e.g., scale and rotation) and the resolution gap (e.g., HR and LR). To tackle these challenges, we propose C2-Matching in this work, which produces explicit, robust matching across transformation and resolution. 1) For the transformation gap, we propose a contrastive correspondence network, which learns transformation-robust correspondences using augmented views of the input image. 2) For the resolution gap, we adopt a teacher-student correlation distillation, which distills knowledge from the easier HR-HR matching to guide the more ambiguous LR-HR matching. 3) Finally, we design a dynamic aggregation module to address the potential misalignment issue. In addition, to faithfully evaluate the performance of Ref-SR under a realistic setting, we contribute the Webly-Referenced SR (WR-SR) dataset, mimicking the practical usage scenario. Extensive experiments demonstrate that our proposed C2-Matching outperforms state-of-the-art methods by over 1dB on the standard CUFED5 benchmark. Notably, it also shows great generalizability on the WR-SR dataset as well as robustness across large scale and rotation transformations.
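The teacher-student correlation distillation in point 2) can be illustrated with a small sketch. This is not the released implementation: the function names, the softmax temperature, the use of a KL divergence as the distillation loss, and the toy feature vectors are all assumptions made for illustration. The idea shown is that a teacher correlation map (HR input features against HR reference features) supervises a student correlation map (LR input features against the same reference features).

```python
import math

def l2_normalize(v):
    # Scale a feature vector to unit length (guarding against zero vectors).
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]

def softmax(scores, temperature):
    # Numerically stable softmax with a temperature (hypothetical value below).
    m = max(scores)
    exps = [math.exp((s - m) / temperature) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def correlation(query_feats, ref_feats, temperature=0.15):
    # Row i is a distribution over reference positions for query position i,
    # built from cosine similarities between feature vectors.
    q = [l2_normalize(v) for v in query_feats]
    r = [l2_normalize(v) for v in ref_feats]
    return [
        softmax([sum(a * b for a, b in zip(qi, rj)) for rj in r], temperature)
        for qi in q
    ]

def distillation_loss(teacher_corr, student_corr, eps=1e-12):
    # Mean KL(teacher || student) over query positions (assumed loss form).
    total = 0.0
    for t_row, s_row in zip(teacher_corr, student_corr):
        total += sum(
            t * math.log((t + eps) / (s + eps)) for t, s in zip(t_row, s_row)
        )
    return total / len(teacher_corr)

# Toy features: two positions, two channels (illustrative values only).
hr_input_feats = [[1.0, 0.0], [0.0, 1.0]]   # sharp features, easy HR-HR matching
lr_input_feats = [[0.8, 0.6], [0.6, 0.8]]   # blurred features, ambiguous LR-HR matching
ref_feats = [[1.0, 0.0], [0.0, 1.0]]        # HR reference features

teacher = correlation(hr_input_feats, ref_feats)
student = correlation(lr_input_feats, ref_feats)
loss = distillation_loss(teacher, student)
print(f"distillation loss: {loss:.4f}")
```

In this toy setting the teacher rows are sharply peaked while the student rows are flatter, so the loss is positive; minimizing it would push the student's LR-HR correlations toward the teacher's HR-HR correlations.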

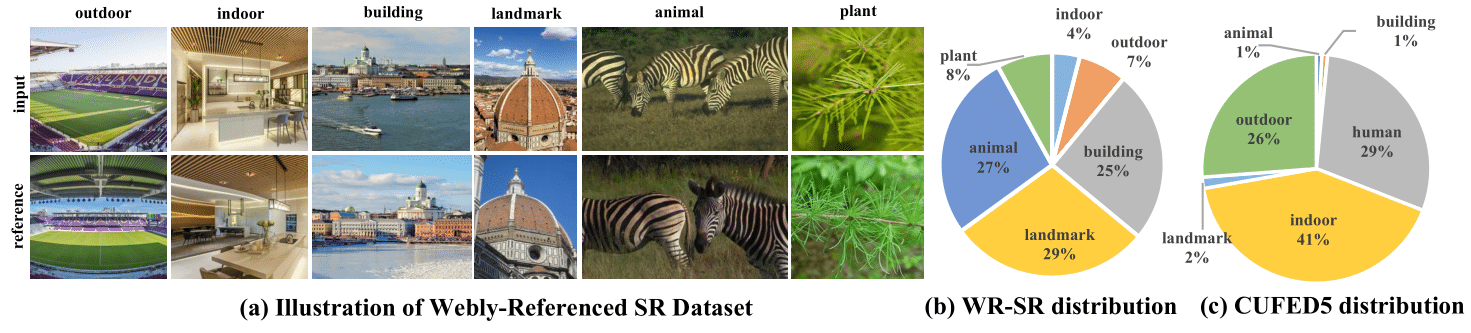

Webly-Referenced SR Dataset

Our Webly-Referenced SR (WR-SR) dataset is 1) collected in a more realistic way and 2) more diverse than existing Ref-SR datasets. Some examples from the WR-SR dataset are shown here.

You can download the WR-SR dataset and the baseline methods' results through the following link:

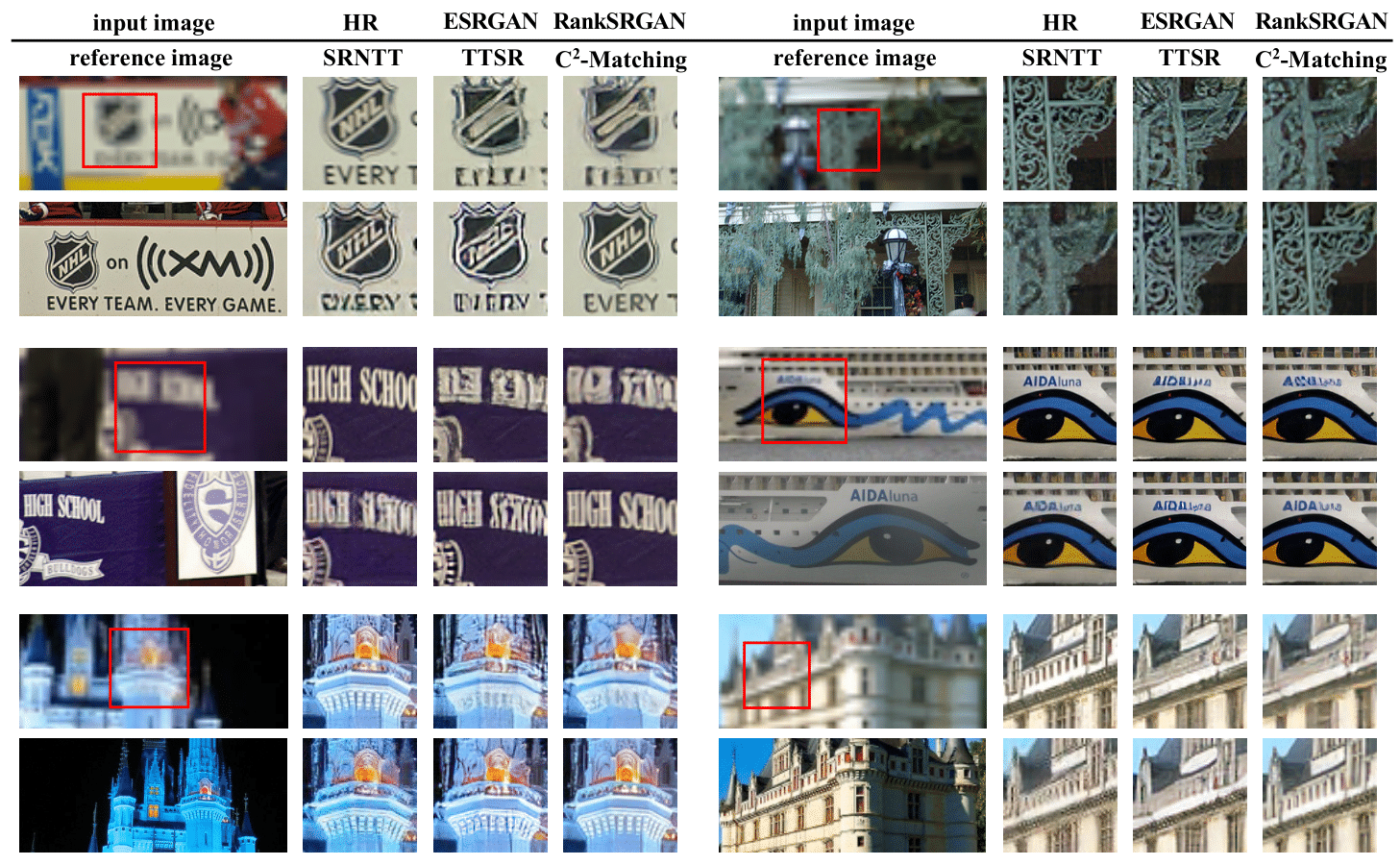

Visual Results

Here we show some visual comparisons with state-of-the-art methods.

For more results on the benchmarks, you can directly download our C2-Matching results from the following link:

Materials

Code and Models

Citation

@InProceedings{jiang2021c2matching,
  author = {Yuming Jiang and Kelvin C.K. Chan and Xintao Wang and Chen Change Loy and Ziwei Liu},
  title = {Robust Reference-based Super-Resolution via C2-Matching},
  booktitle = {The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  year = {2021}
}